Crypto traders woke up to a strange sight on 18 November 2025. Charts refused to load, trading terminals froze, and familiar dashboards returned blunt error messages instead of live prices. The problem did not come from any blockchain.

- Outage shows how fragile “decentralized” apps can be

- Decentralization has focused on the wrong half of the stack

- What end-to-end decentralization actually means

- Why this matters for crypto key indicators

- Builders racing toward a more resilient stack

- Conclusion: from slogan to standard

- Frequently Asked Questions

- Glossary of key terms

It started inside a single internet infrastructure provider that handles a large share of global web traffic. When that provider went offline, many crypto exchanges, block explorers and DeFi tools disappeared from the web in an instant. For a sector that prides itself on resilience, the outage was a loud reminder that marketing claims often run ahead of reality.

In the days that followed, a phrase kept surfacing in technical circles: end-to-end decentralization. The idea is simple, but the implications are large. A protocol is not truly resilient if only the consensus layer is distributed while critical pieces of the stack still depend on a handful of centralized companies. This incident has turned end-to-end decentralization from a niche ideal into a practical survival strategy for serious crypto projects.

Outage shows how fragile “decentralized” apps can be

During the November outage, a configuration error inside a major internet infrastructure company triggered widespread 500 errors across the web. Platforms that rely on this provider for routing and security, including social networks, productivity tools, trading services and gaming platforms, became unreachable for hours in several regions. Analysts estimate that around one fifth of all websites experienced some level of disruption, and that large crypto venues such as exchanges, price trackers and DeFi front ends were among those affected.

For on-chain purists, this exposed an uncomfortable truth. Assets continued to exist on their respective networks, but users could not move or even view them because access still depended on centralized web infrastructure. In practice, these systems were decentralized at the ledger layer, but not at the level of real user experience. End-to-end decentralization asks a harder question: can users still transact, see balances and verify data when any single company fails? The outage showed that, for many projects, the honest answer is still no.

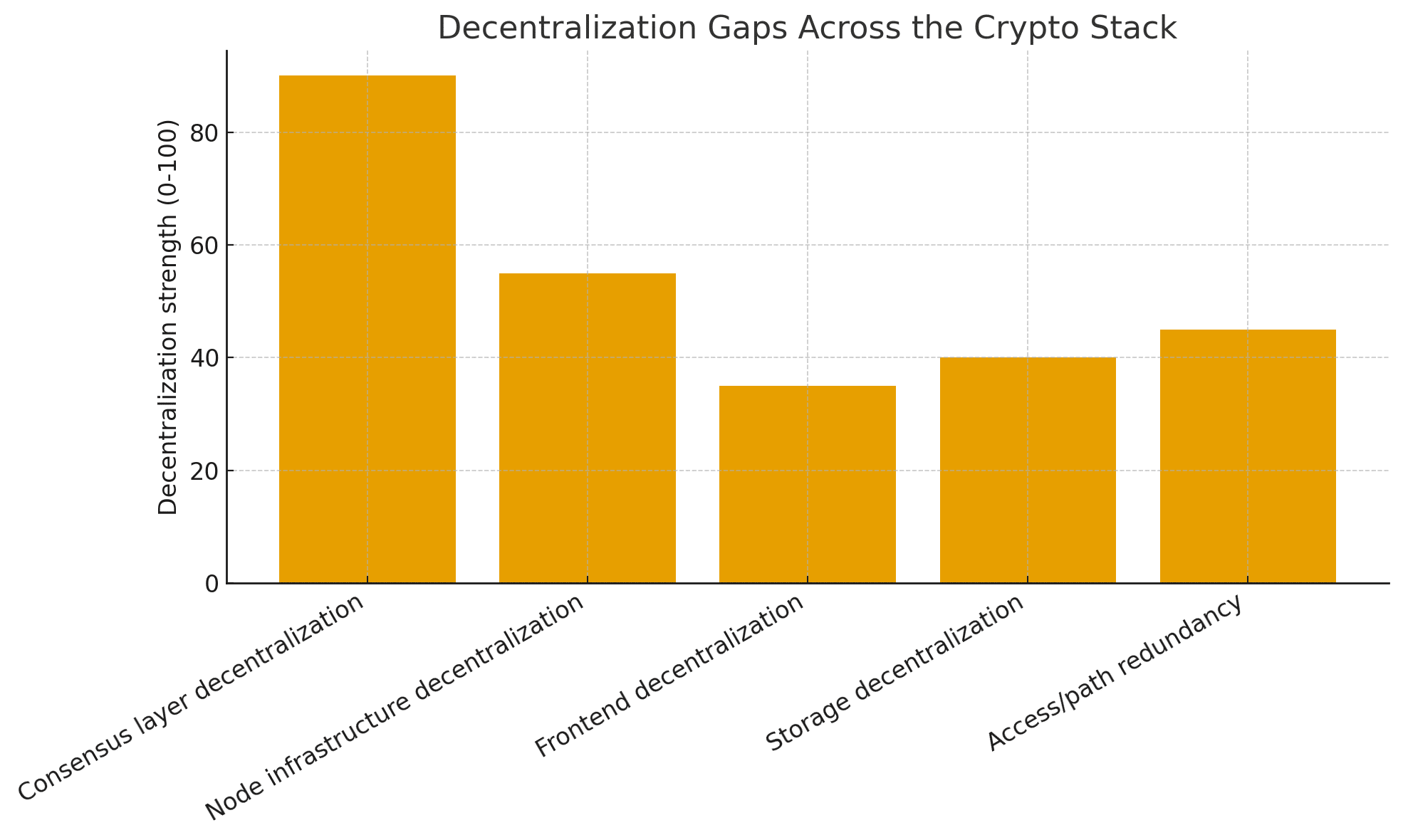

Decentralization has focused on the wrong half of the stack

For the past decade, most of the intellectual energy in crypto has gone into decentralizing consensus. Developers refined proof of stake, widened validator sets, and hardened smart contract platforms. Those gains matter. They reduce the chance that a single operator can censor transactions or rewrite history.

At the same time, many teams quietly leaned on Web2 shortcuts for everything around the chain. Node infrastructure moved to a small group of large hosting providers. The application programming interfaces that feed wallets and front ends are often run by a few high-volume gateway services. Domain names sit in traditional registries. User interfaces live on centralized content delivery networks. In good times, this setup is fast and convenient. In a stress event, it becomes a chain of single points of failure.

Architects who push for end-to-end decentralization argue that a blockchain does not fully defend user rights if access, data storage and coordination still depend on trusted intermediaries. Apps that look censorship resistant on paper can become inaccessible in practice when a provider turns off an account, a region faces sanctions pressure, or a software bug takes down a core network service.

What end-to-end decentralization actually means

End-to-end decentralization begins with the familiar ingredients of a healthy blockchain: a widely distributed validator or miner set, clear economic incentives, open-source code and transparent governance. On top of that, the data that applications rely on is stored on systems that cannot be quietly altered or deleted by a single vendor, and user access routes are diversified so that no one provider holds the keys to the front door.

In practice, this approach calls for several design choices. Front ends can be served from decentralized storage networks or bundled as installable clients rather than hosted exclusively on a corporate content network. Transaction routing can move through multiple independent node operators instead of one large gateway. Domain resolution can use human-readable blockchain names alongside, or even instead of, traditional registries. Each of these choices costs time and sometimes user convenience, but each one moves the protocol closer to end-to-end decentralization.
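To make the routing idea concrete, here is a minimal Python sketch of failover across several independent node operators. The endpoint URLs, the `call_with_failover` helper and the `fake_send` transport are all hypothetical and exist only for illustration. A real client would plug in an HTTP transport and would likely add timeouts, retries and response cross-checking.

```python
from typing import Any, Callable, Sequence


class AllProvidersFailed(Exception):
    """Raised when every configured endpoint fails."""


def call_with_failover(
    send: Callable[[str, dict], Any],
    endpoints: Sequence[str],
    payload: dict,
) -> tuple[str, Any]:
    """Try each endpoint in order; return (endpoint, response) from the first success."""
    errors = []
    for url in endpoints:
        try:
            return url, send(url, payload)
        except Exception as exc:  # e.g. timeout, HTTP 5xx, DNS failure
            errors.append(f"{url}: {exc}")
    raise AllProvidersFailed("; ".join(errors))


# Hypothetical endpoints run by independent operators (assumption, not real services).
ENDPOINTS = [
    "https://rpc.provider-a.example",
    "https://rpc.provider-b.example",
    "https://rpc.provider-c.example",
]


def fake_send(url: str, payload: dict) -> dict:
    """Stand-in transport that pretends provider A is down."""
    if "provider-a" in url:
        raise ConnectionError("HTTP 500")
    return {"jsonrpc": "2.0", "id": payload["id"], "result": "0x10"}


request = {"jsonrpc": "2.0", "id": 1, "method": "eth_blockNumber", "params": []}
used, response = call_with_failover(fake_send, ENDPOINTS, request)
print(used, response["result"])
```

Because the transport is passed in as a function, the same loop can be exercised offline, as here, or wired to a real HTTP client. The order of the endpoint list is the only routing policy in this sketch.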

Thought leaders in the ecosystem have warned about these issues for years. Recent manifestos from core developers talk about trustless infrastructure that removes as many social trust assumptions as possible from the path between a user and the chain. End-to-end decentralization is the concrete expression of that vision. It is less a slogan and more a checklist that asks how many layers of the stack were designed to survive both corporate failure and political pressure.

Why this matters for crypto key indicators

Investors and builders often talk about market cap, total value locked and trading volume. Those indicators are useful, but they can hide structural risk. After the outage, a new set of questions began to surface. How geographically distributed are validators? How many independent paths exist between a user and the network? What percentage of traffic flows through a single infrastructure vendor? These are all part of a more mature playbook that treats end-to-end decentralization as a core indicator in itself.
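Questions like these can be turned into simple numbers. The Python sketch below computes two illustrative concentration measures: the share of traffic carried by the largest vendor, and the smallest number of entities whose combined share exceeds a chosen threshold. The function names, the vendor labels, the traffic figures and the 50% threshold are all assumptions made for the example, not measurements from the outage.

```python
def largest_share(traffic: dict[str, float]) -> float:
    """Fraction of total traffic handled by the single largest vendor."""
    total = sum(traffic.values())
    if total <= 0:
        raise ValueError("traffic must contain a positive total")
    return max(traffic.values()) / total


def min_entities_to_exceed(shares: list[float], threshold: float = 0.5) -> int:
    """Smallest number of entities whose combined share exceeds `threshold`."""
    total = sum(shares)
    if total <= 0:
        raise ValueError("shares must contain a positive total")
    running = 0.0
    for count, share in enumerate(sorted(shares, reverse=True), start=1):
        running += share
        if running / total > threshold:
            return count
    return len(shares)


# Hypothetical request counts per infrastructure vendor.
traffic = {"vendor_a": 70.0, "vendor_b": 20.0, "vendor_c": 10.0}
print(f"largest vendor share: {largest_share(traffic):.0%}")

# Hypothetical stake held by four validator operators.
stake = [40.0, 30.0, 20.0, 10.0]
print("operators needed to exceed 50%:", min_entities_to_exceed(stake, 0.5))
```

A high largest-vendor share or a low entity count both point to a choke point. Which threshold matters depends on the protocol, so it is left as a parameter here.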

Projects with deeper decentralization across storage, access and execution tend to show more consistent liquidity during stress events, because markets do not freeze when a data center goes dark. They are more resistant to censorship, since there is no easy choke point for regulators or attackers to target. They also inspire more confidence from institutional users who must assess operational risk, not only price charts. In that sense, end-to-end decentralization becomes a driver of long-term value, much as strong balance sheets matter in traditional finance.

From a policy perspective, regulators are also beginning to look past labels and ask how autonomous a protocol really is. If front ends and critical data live entirely on centralized servers, authorities may treat the project more like a conventional financial service. If, on the other hand, end-to-end decentralization is visible in the architecture, the discussion shifts toward how users themselves hold responsibility for their own actions on a neutral settlement layer.

Builders racing toward a more resilient stack

New infrastructure projects are already trying to close the gap. Decentralized storage layers designed for smart contracts now offer petabyte-scale capacity on top of existing networks, with costs that aim to be a fraction of traditional on-chain storage. Some teams have launched modular storage rollups that use recent data availability upgrades to make it practical to keep application logic and user data fully on-chain rather than parked on a centralized server.

One example is a storage-focused layer 2 often highlighted by Ethereum researchers. It provides programmable key-value storage secured by the base chain, while the data itself is held by a distributed network of storage providers. The design targets petabyte-scale capacity and claims to reduce storage costs by orders of magnitude compared with standard contract storage operations. This type of system allows fully on-chain games, social feeds and even media platforms where both logic and content can survive the loss of any single hosting company.
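The general principle behind this kind of design can be sketched with content addressing: if the expected hash of a piece of data is anchored somewhere trusted, a client can fetch the bytes from any provider and verify them locally. The Python example below is a generic illustration only, not the API of the layer 2 described above. The `content_id` and `fetch_verified` names and the three stand-in providers are hypothetical.

```python
import hashlib
from typing import Callable, Iterable, Optional

Provider = Callable[[str], Optional[bytes]]


def content_id(data: bytes) -> str:
    """Identify content by its SHA-256 digest (hex encoded)."""
    return hashlib.sha256(data).hexdigest()


def fetch_verified(expected_id: str, providers: Iterable[Provider]) -> bytes:
    """Return the first response whose hash matches `expected_id`."""
    for fetch in providers:
        try:
            data = fetch(expected_id)
        except Exception:
            continue  # provider offline or erroring: try the next one
        if data is not None and content_id(data) == expected_id:
            return data  # bytes match the anchored digest
    raise LookupError(f"no provider returned valid data for {expected_id}")


# Stand-in providers (assumptions for the demo): one offline, one tampering, one honest.
original = b"<html>front end bundle</html>"
anchored_id = content_id(original)  # imagine this digest is recorded on-chain


def offline_provider(cid: str) -> Optional[bytes]:
    raise ConnectionError("provider unreachable")


def tampering_provider(cid: str) -> Optional[bytes]:
    return b"<html>modified bundle</html>"


def honest_provider(cid: str) -> Optional[bytes]:
    return original


result = fetch_verified(anchored_id, [offline_provider, tampering_provider, honest_provider])
print(result == original)
```

In this sketch no single provider has to be trusted or even reachable. The client accepts whichever copy matches the digest and ignores the rest.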

At the same time, teams that build wallets, rollups, and decentralized applications are exploring multi-provider routing, client-side interfaces, and new naming standards. Their goal is to turn end-to-end decentralization into something that users feel as reliable rather than notice only in marketing copy. Instead of one outage halting trading and lending across half the industry, failures would be isolated, and alternative paths to the chain would remain open.

The November incident delivered an unplanned real-world test of these ideas. Crypto platforms that had diversified their infrastructure stack reported shorter interruptions and faster recovery, while sites that depended on a single infrastructure vendor remained unreachable for longer stretches of the trading day. End-to-end decentralization did not prevent the bug that caused the outage, but it clearly separated projects that could route around the problem from those that simply had to wait for a fix.

Conclusion: from slogan to standard

Crypto was born from a desire to remove single points of failure from money and information. Over time, convenience pulled many builders back toward familiar Web2 infrastructure. The recent outage has made that tradeoff impossible to ignore. End-to-end decentralization is emerging as the standard that separates protocols that are merely distributed on paper from systems that can keep working when something important breaks.

For traders, developers and regulators, the next phase of growth is likely to reward projects that treat decentralization as a full-stack design principle rather than a checkbox on a pitch deck.

Frequently Asked Questions

Q1. If blockchains did not go down, why did the outage matter for crypto?

The outage mattered because users interact with crypto through front ends, APIs and wallets that sit on traditional web infrastructure. Those components failed, so traders could not submit orders, withdraw funds or even check balances.

Q2. How can a project move toward full-stack decentralization without ruining user experience?

A practical approach starts with redundancy. Teams can use more than one infrastructure provider, add client-side signing, and mirror interfaces on decentralized storage networks. Over time, they can integrate blockchain-based naming, open-source front ends, and community-run gateways.

Q3. Does a focus on deeper decentralization affect traditional crypto indicators like price and liquidity?

In the short term, markets often focus on hype cycles and macro news. Over a longer horizon, better decentralization can support healthier liquidity and more stable participation, because platforms are less likely to freeze during stress events.

Glossary of key terms

Blockchain consensus

The process by which nodes in a network agree on the current state of the ledger. Strong consensus mechanisms reduce the risk of double spending and censorship.

Data availability (DA)

A property that ensures transaction data and state updates are published in a way that other nodes can download and verify. Recent DA upgrades have made it cheaper to store large amounts of data that smart contracts can rely on.

Decentralized storage

A system where files or application data are distributed across many independent providers rather than stored on a single company server. Incentive mechanisms and cryptographic proofs are used to ensure that data remains available and unaltered.

Front end

The user interface of a crypto application, such as a website or wallet screen. Even when smart contracts are decentralized, a centralized front end can still act as a choke point if it goes offline or blocks certain users.

Validator set

The group of entities that propose and confirm blocks in proof-of-stake networks. A large, geographically and institutionally diverse validator set is one of the core indicators of a healthy, resilient blockchain.

Node infrastructure provider

A company or collective that runs full nodes and exposes them through APIs for applications and wallets. Heavy dependence on a small set of providers can undermine the practical benefits of decentralization.